National Space Technology Development Priorities: NASA’s Revised Strategy

Discover how NASA is reshaping the future of space exploration with its revised national space technology development priorities. This article ...

NASA’s New Plan for the Mars Sample Return Mission

Mars Sample Return mission, NASA Mars mission, Martian soil samples, interplanetary exploration, Mars rocks, space technology, NASA ESA collaboration, future ...

Read more

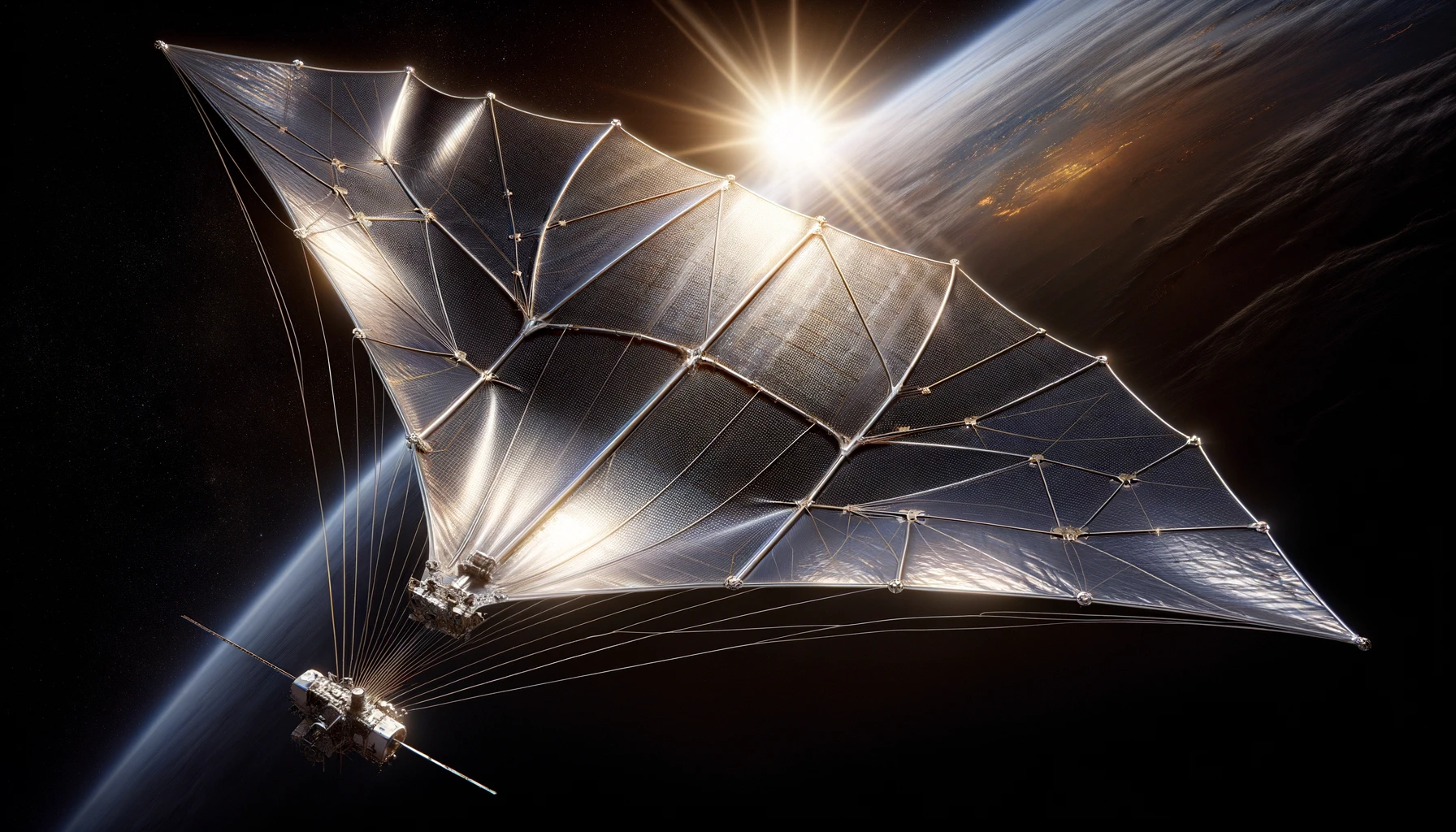

NASA’s Next-Generation Solar Sail Boom Technology: A Leap Towards Advanced Space Propulsion

Discover NASA’s groundbreaking solar sail boom technology, set to transform space travel. This article explores the features, development, and potential ...

NASA’s TESS Mission Pauses for Vital Upgrades: Enhancing Exoplanet Discovery

NASA, TESS, exoplanet discovery, space telescope, astronomical research, satellite maintenance, science observations, space mission upgrades, celestial observatory. Explore why NASA’s ...

SpaceX Launches Space Force Weather Satellite: A New Era for a Legacy Program

Explore the groundbreaking launch of a new Space Force weather satellite by SpaceX, designed to enhance global weather monitoring. This ...

Unlocking the Mysteries of Aurora: Insights from Ultra-fast Electron Measurements

Explore the groundbreaking realm of aurora research through ultra-fast electron measurements in multiple directions. This detailed article delves into the ...

NASA Unveils New Strategy for Space Sustainability Amid Orbital Risks

NASA has introduced a comprehensive Space Sustainability Strategy to address the challenges of the increasingly congested and hazardous conditions in ...

NASA’s New Lunar Time Zone: A Giant Leap for NASA and Global Space Collaboration

NASA lunar time zone, White House directive moon time, Lunar exploration coordination, International space collaboration, Moon mission timekeeping, Space exploration ...

Loral O’Hara and Team Triumph: A Journey of Discovery and Return from the ISS

A Historic Mission Comes to an ...

NASA Eclipse Mission: Rockets to Probe the Sun’s Corona During the Total Solar Eclipse on April 8, 2024

NASA, total solar eclipse, solar research, Sun’s corona, solar wind, space weather, astronomical event, sounding rocket program, space science, solar ...

Advancing Lunar Exploration: NASA Selects Three Companies for Artemis Rover Designs

NASA, Artemis program, lunar rover, Intuitive Machines, Lunar Outpost, Venturi Astrolab, lunar exploration, space technology, moon missions, commercial spaceflight. NASA ...

A Home for Astronauts around the Moon: Gateway to Deep Space Exploration

As humanity’s gaze extends beyond Earth, the dream of establishing a permanent presence in space draws closer to reality. The ...

Unveiling the Mysteries of Jupiter’s Moon: NASA’s Europa Clipper Mission

NASA Europa Clipper mission, NASA Jupiter exploration, Icy moon Europa, Spacecraft environmental testing, Outer solar system exploration, Life beyond Earth, ...

Unveiling the First Instruments for the Artemis Moon Mission

In a monumental stride towards lunar exploration, NASA has officially announced the selection of the first set of scientific instruments ...

Uncovering Mars’ Watery Past: Curiosity Explores Gediz Vallis Channel

NASA, Curiosity Rover, Mars Exploration, Gediz Vallis Channel, Ancient Water on Mars, Martian Geology, Mount Sharp, Mars Climate History, Space ...
